Adoption of predictive analytics in supply chains continues to rise, and 62% of companies now use AI to track and measure sustainability. Yet the industry faces a paradox. The global supply chain management market is projected to grow from USD 23.58 billion in 2023 to USD 63.77 billion by 2032, but organizations still struggle with a basic problem: poor data quality.

AI-driven supply chain optimization delivers clear benefits: companies that use AI in their supply chain operations cut costs by 20% and boost revenue by 10%. Predictive analytics solutions for supply chains hold great potential, but they need high-quality data to work well. Our research shows that data scattered across legacy systems degrades the performance of supply chain analytics applications. GenAI models are expected to power 25% of KPI reporting by 2028, yet many companies can’t take full advantage of these advances because of data quality issues.

Study Reveals Data Quality Gaps Undermine Predictive Analytics

“You can have all of the fancy tools, but if [your] data quality is not good, you’re nowhere.” — Thomas C. Redman, Data quality expert, known as ‘the Data Doc’, author and consultant

Research shows a critical gap between AI ambitions and data reality in supply chains. A striking 81% of AI professionals say their companies still struggle with significant data quality problems. Even so, companies keep investing heavily in predictive analytics technologies without fixing these basic issues.

The difference between perception and reality raises serious concerns. About 90% of director and manager-level data professionals think their company’s leadership doesn’t pay enough attention to data quality problems. This disconnect between operational teams and executives creates a dangerous blind spot in supply chain management.

Common data quality problems in supply chain analytics include missing data points, broken or poorly calibrated sensors, incomplete data mappings, incompatible systems, and architectural limitations. These challenges are worse in manufacturing environments, where sensors operate under physically demanding conditions.

Poor data quality hits companies hard financially. Studies show that a typical organization loses 8% to 12% of its revenue to data quality problems, and for service organizations the cost can reach 40% to 60% of expenses. Across industries, this adds up to billions of dollars in losses every year.

Data quality problems directly undermine predictive capabilities. McKinsey’s research shows that supply chain statistical models built on high-quality data can cut costs by 3% to 8%. When the data is flawed, the same models produce unreliable results and waste money.

The problems persist: only 15% of people trust their systems to produce clean, reliable data. The situation is also getting worse. In 2018, 20% more respondents than in the previous year reported spending over a quarter of their day searching for data.

Organizations face a tough situation. Almost half (47%) of AI professionals worry that their companies have invested too much in AI models that don’t work well, which forces a rethink of how they approach predictive analytics. Even sophisticated supply chain analytics projects will keep falling short unless data quality is fixed first.

Why Predictive Analytics Depend on High-Quality Data

The “garbage in, garbage out” principle governs supply chain predictive analytics: AI models can’t deliver reliable results without high-quality data. Yet organizations capture only 56% of their potentially valuable data, and 77% of what they capture turns out to be redundant, obsolete, trivial, or unclassified. That leaves only 23% of the captured data as “good data” available for AI-driven business processes.
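The two percentages compound, which is easy to miss. A quick calculation, assuming (as the paragraph describes) that the 23% of good data applies to the captured 56%, shows how much of all potentially valuable data actually reaches AI-driven processes:

```python
# Share of potentially valuable data that organizations capture (figure from the text)
captured_share = 0.56
# Share of captured data that is redundant, obsolete, trivial, or unclassified
rot_share = 0.77

# "Good data" is what remains of the captured portion
good_share_of_captured = 1 - rot_share                          # about 0.23
good_share_of_total = captured_share * good_share_of_captured    # about 0.129

print(f"Good data as a share of captured data: {good_share_of_captured:.0%}")
print(f"Good data as a share of all potentially valuable data: {good_share_of_total:.1%}")
```

This prints 23% and 12.9%: roughly one unit in eight of potentially valuable data ends up usable.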

Data silos are a major roadblock for predictive analytics. Supply chain teams often work in isolation and make decisions based on outdated, static information, which undermines both their confidence and their accuracy. New solutions purchased without proper integration plans often fail to communicate with each other, creating bottlenecks throughout the supply chain.

Quality issues that plague predictive analytics include:

Missing values that bias predictions by excluding important information

Inconsistent formats across systems that create errors during analysis

Measurement errors that introduce noise into sensor readings

Temporal relevance problems when data from atypical events skews predictions
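The four issue types above can be checked mechanically before data reaches a model. The sketch below is a minimal illustration, not a product feature: the record fields (`date`, `qty`, `temp_c`), the sensor bounds, and the "atypical" window are all hypothetical assumptions chosen for the example.

```python
from datetime import date, datetime

def profile_records(records, sensor_range, atypical_periods):
    """Flag records affected by the four issue types listed above.

    records: list of dicts with hypothetical keys "date", "qty", "temp_c".
    sensor_range: (low, high) plausible bounds for the temperature sensor.
    atypical_periods: list of (start, end) date pairs, e.g. a known disruption.
    """
    issues = {"missing": [], "bad_format": [], "out_of_range": [], "atypical": []}
    low, high = sensor_range
    for i, rec in enumerate(records):
        # 1. Missing values: any required field absent or None
        if any(rec.get(k) is None for k in ("date", "qty", "temp_c")):
            issues["missing"].append(i)
            continue
        # 2. Inconsistent formats: dates are expected as ISO 8601 (YYYY-MM-DD)
        try:
            d = datetime.strptime(rec["date"], "%Y-%m-%d").date()
        except ValueError:
            issues["bad_format"].append(i)
            continue
        # 3. Measurement errors: readings outside plausible physical bounds
        if not low <= rec["temp_c"] <= high:
            issues["out_of_range"].append(i)
        # 4. Temporal relevance: data from atypical periods that may skew a forecast
        if any(start <= d <= end for start, end in atypical_periods):
            issues["atypical"].append(i)
    return issues

records = [
    {"date": "2024-03-01", "qty": 120, "temp_c": 21.5},
    {"date": "03/02/2024", "qty": 118, "temp_c": 22.0},   # US-style date from another system
    {"date": "2024-03-03", "qty": None, "temp_c": 21.8},  # missing quantity
    {"date": "2024-03-04", "qty": 125, "temp_c": 999.0},  # faulty sensor reading
    {"date": "2020-04-15", "qty": 15, "temp_c": 20.9},    # demand during a disruption
]
report = profile_records(records, sensor_range=(-40, 85),
                         atypical_periods=[(date(2020, 3, 1), date(2020, 6, 30))])
print(report)
# {'missing': [2], 'bad_format': [1], 'out_of_range': [3], 'atypical': [4]}
```

Atypical records are flagged rather than discarded, because whether a disruption period belongs in the training data is a business decision, not a formatting one.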

Predictive models need historical or live data to spot patterns and generate forecasts. When the input data is flawed through errors, inconsistencies, or gaps, the predictions become unreliable, however sophisticated the algorithm.
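A small illustration of how a gap in the input, rather than the algorithm, drives the error. The sketch assumes a plain moving-average forecast and a hypothetical weekly demand series in which two weeks were lost to a sync failure; it compares silently treating gaps as zero with averaging only the observed values.

```python
def moving_average_forecast(history, window=4, fill_missing_with_zero=False):
    """Forecast next-period demand as the mean of the last `window` observations."""
    recent = history[-window:]
    if fill_missing_with_zero:
        # Naive handling: gaps become zero demand (a common silent failure in exports)
        values = [0 if v is None else v for v in recent]
    else:
        # Safer handling: average only the observations that actually exist
        values = [v for v in recent if v is not None]
    if not values:
        raise ValueError("no observed values in the forecast window")
    return sum(values) / len(values)

weekly_demand = [100, 104, 98, None, 102, None]  # hypothetical; two weeks missing
naive = moving_average_forecast(weekly_demand, fill_missing_with_zero=True)
safer = moving_average_forecast(weekly_demand)
print(naive, safer)  # 50.0 100.0
```

The same algorithm halves its forecast purely because of how missing data is handled. Even the safer variant averages only two points here, so the better fix is to recover the missing weeks at the source.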

Poor forecasts carry a direct financial cost: they lead to overproduction, excess inventory, higher holding costs, and lost sales. The quality of forecasting data directly affects inventory optimization, demand sensing, and risk management.
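One way to see the link between data quality and holding costs is the textbook safety-stock approximation, safety stock ≈ z × σ × √L, where σ is the standard deviation of forecast error per period, L is the lead time in periods, and z reflects the target service level. The sketch assumes independent, normally distributed weekly errors and uses made-up error series; it illustrates the relationship and is not a sizing tool.

```python
import math
from statistics import NormalDist, stdev

def safety_stock(forecast_errors, lead_time_periods, service_level=0.95):
    """Classic approximation: z * stdev(forecast error) * sqrt(lead time)."""
    z = NormalDist().inv_cdf(service_level)  # about 1.645 for a 95% service level
    return z * stdev(forecast_errors) * math.sqrt(lead_time_periods)

# Hypothetical weekly forecast errors (actual minus forecast), in units
errors_clean = [-5, 3, 4, -2, 6, -4, 1, -3]
# Same weeks after bad data (duplicated orders, missed receipts) distorted the forecasts
errors_noisy = [-25, 18, 30, -12, 40, -28, 9, -20]

results = {
    label: safety_stock(errs, lead_time_periods=4)
    for label, errs in (("clean", errors_clean), ("noisy", errors_noisy))
}
print({label: round(value, 1) for label, value in results.items()})
```

Because safety stock grows linearly with the error's standard deviation, forecast errors several times larger translate into several times more buffer inventory to hold and pay for.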

Supply chain data must be accurate, detailed, and properly managed to unlock the full potential of predictive analytics. Teams need to monitor, refine, and normalize datasets continuously while removing errors and outliers. It pays to establish data quality checks early: this reduces technical debt and minimizes the risk of costly model retraining later.
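A minimal cleaning step along these lines normalizes units first and then flags outliers. The sketch assumes a hypothetical case size of 12 units and uses Tukey's interquartile-range (IQR) fences as the outlier test, which is one common choice among several.

```python
from statistics import quantiles

CASE_SIZE = 12  # hypothetical: one case holds 12 units

def normalize_quantity(record):
    """Convert quantities reported in cases into units so all systems agree."""
    qty, uom = record["qty"], record["uom"].strip().lower()
    if uom in ("unit", "units", "ea"):
        return qty
    if uom in ("case", "cases", "cs"):
        return qty * CASE_SIZE
    raise ValueError(f"unknown unit of measure: {record['uom']!r}")

def drop_iqr_outliers(values, k=1.5):
    """Split values into those inside [Q1 - k*IQR, Q3 + k*IQR] and those outside."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    kept = [v for v in values if low <= v <= high]
    removed = [v for v in values if not low <= v <= high]
    return kept, removed

raw = [
    {"qty": 120, "uom": "units"},
    {"qty": 10, "uom": "CS"},       # 10 cases = 120 units
    {"qty": 118, "uom": "ea"},
    {"qty": 125, "uom": "units"},
    {"qty": 11, "uom": "case"},     # 132 units
    {"qty": 1150, "uom": "units"},  # likely a keying error (extra zero)
    {"qty": 122, "uom": "units"},
]
normalized = [normalize_quantity(r) for r in raw]
clean, flagged = drop_iqr_outliers(normalized)
print(clean, flagged)  # [120, 120, 118, 125, 132, 122] [1150]
```

The order matters: without normalizing first, the case-based quantities (10 and 11) would be flagged as low outliers alongside the genuine keying error. In practice, flagged values are better routed for review than deleted silently.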

Companies that want to realize the full potential of supply chain predictive analytics should focus on data integration, which breaks down silos and gives teams access to live information instead of outdated reports.

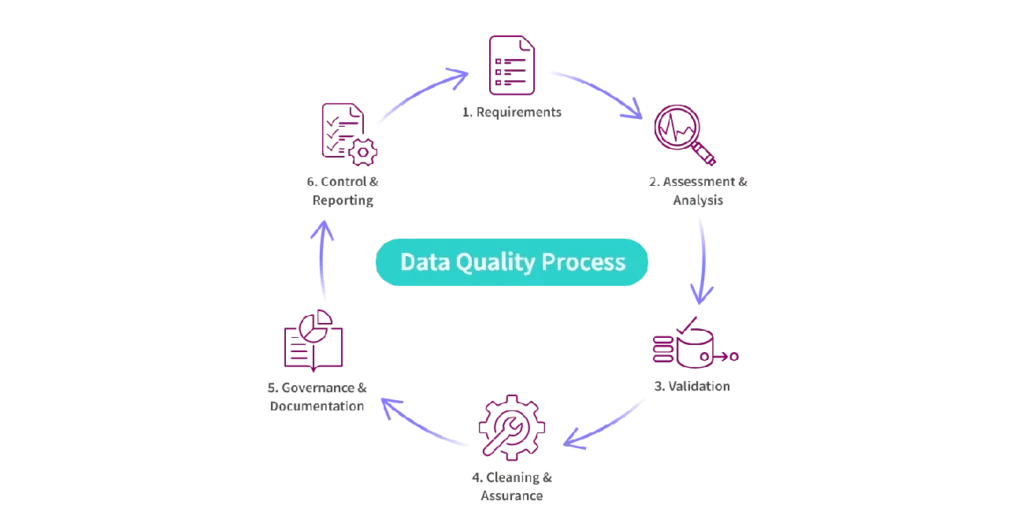

What Supply Chain Leaders Can Do to Improve Data Quality

“If your current systems are giving you inaccurate reports will investing in big data mean you will still get inaccurate reports but just with more data?” — Dave Waters, Supply chain and AI thought leader, educator

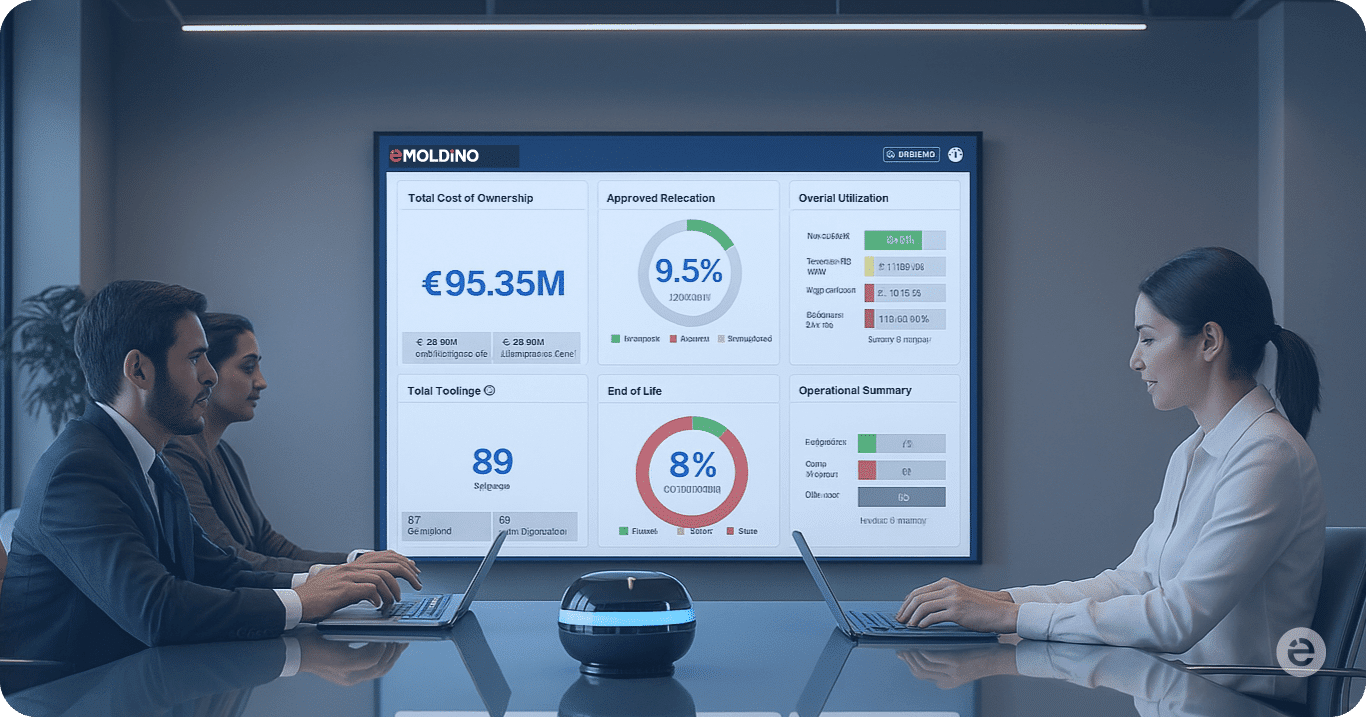

Data governance is the lifeblood of successful supply chain predictive analytics. Supply chain leaders facing data quality problems should start by taking inventory of their current systems, including Transportation Management Systems (TMS), Warehouse Management Systems (WMS), and Enterprise Resource Planning (ERP) systems. A thorough inventory reveals data silos and how information flows between these systems.
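Once the systems and their data exchanges are listed, silos can be found by grouping systems that are connected through any chain of integrations. The sketch below assumes a hypothetical inventory: MES and "Supplier portal" are illustrative additions, and the integration links are invented for the example.

```python
from collections import defaultdict

def find_silos(systems, integrations):
    """Group systems into islands that exchange data; each island is a potential silo.

    systems: iterable of system names from the inventory.
    integrations: iterable of (source, target) pairs that exchange data.
    """
    neighbors = defaultdict(set)
    for a, b in integrations:
        # Treat any data exchange as a two-way link for grouping purposes
        neighbors[a].add(b)
        neighbors[b].add(a)

    seen, islands = set(), []
    for start in systems:
        if start in seen:
            continue
        # Depth-first traversal collects every system reachable from `start`
        stack, island = [start], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            island.add(node)
            stack.extend(neighbors[node] - seen)
        islands.append(sorted(island))
    return islands

inventory = ["ERP", "WMS", "TMS", "MES", "Supplier portal"]  # hypothetical inventory
links = [("ERP", "WMS"), ("WMS", "TMS")]                     # hypothetical integrations
print(find_silos(inventory, links))
# [['ERP', 'TMS', 'WMS'], ['MES'], ['Supplier portal']]
```

Any island of one or a few systems that shares no data with the rest is a candidate for integration work.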

The next step is to establish strong data governance policies. Organizations should define clear roles and responsibilities for data ownership, security, and privacy, and their policies should specify how data is collected, stored, and used. Companies with strong cross-functional communication respond to supply chain disruptions 30% faster than siloed operations.

Supply chain leaders should focus on these validation strategies to ensure data accuracy:

Regular audits and validation procedures to verify data at entry points

Data cleansing to systematically remove outdated or incorrect information

Structured validation processes that include checking data structure, quality, and eligibility
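The three kinds of checks in the last item can run at the point of entry, before a record is stored. The sketch below is illustrative only: the goods-receipt schema, field names, and SKU list are hypothetical, and `today` is passed in explicitly so the check is repeatable.

```python
from datetime import date

# Hypothetical schema for an inbound goods-receipt record
REQUIRED_FIELDS = {"po_number": str, "sku": str, "qty": int, "received_on": date}

def validate_receipt(record, known_skus, today):
    """Validate a record at the point of entry; return a list of problems (empty = OK)."""
    errors = []
    # 1. Structure: every required field is present with exactly the expected type
    #    (exact match, so a bool is not accepted as int, nor a datetime as date)
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            errors.append(f"structure: missing field '{field}'")
        elif type(record[field]) is not expected_type:
            errors.append(f"structure: '{field}' should be {expected_type.__name__}")
    if errors:
        return errors  # value checks are meaningless if the shape is wrong
    # 2. Quality: values are plausible
    if record["qty"] <= 0:
        errors.append("quality: quantity must be positive")
    if record["received_on"] > today:
        errors.append("quality: receipt date is in the future")
    # 3. Eligibility: the record refers to master data the business recognizes
    if record["sku"] not in known_skus:
        errors.append(f"eligibility: unknown SKU '{record['sku']}'")
    return errors

skus = {"MOLD-001", "MOLD-002"}
ok = {"po_number": "PO-1001", "sku": "MOLD-001", "qty": 40, "received_on": date(2024, 5, 2)}
bad = {"po_number": "PO-1002", "sku": "MOLD-999", "qty": -5, "received_on": date(2024, 5, 2)}
print(validate_receipt(ok, skus, today=date(2024, 5, 3)))  # []
print(validate_receipt(bad, skus, today=date(2024, 5, 3)))
```

The second record fails both the quality check (negative quantity) and the eligibility check (unknown SKU), so it can be rejected or queued for correction instead of silently entering the dataset.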

Cross-functional teams are central to breaking down organizational silos. A McKinsey study shows that companies with highly integrated supply chain operations achieved 20% higher efficiency than those with fragmented structures. Teams with members from multiple functional areas create direct lines of communication between departments.

Smart factory analytics solutions can connect ERP/MRP systems with individual teams so that everyone works from the same up-to-date information. This integration enables centralized reporting and dashboards with up-to-the-minute analysis of key metrics.
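Giving everyone the same information means reconciling what ERP and MRP each report. The sketch below assumes a simple "most recent update wins" rule and hypothetical part IDs and timestamps; real integrations often need richer conflict rules, so it also lists parts on which the systems disagree for follow-up.

```python
from datetime import datetime

def consolidate(sources):
    """Build one view per part from several systems, keeping the freshest value.

    sources: dict mapping system name -> {part_id: (value, updated_at)}.
    Returns (view, conflicts): view maps part_id -> (value, system, updated_at);
    conflicts lists parts whose systems disagree on the value.
    """
    view, seen_values = {}, {}
    for system, records in sources.items():
        for part_id, (value, updated_at) in records.items():
            seen_values.setdefault(part_id, set()).add(value)
            current = view.get(part_id)
            # Keep whichever system updated this part most recently
            if current is None or updated_at > current[2]:
                view[part_id] = (value, system, updated_at)
    conflicts = sorted(p for p, values in seen_values.items() if len(values) > 1)
    return view, conflicts

# Hypothetical on-hand quantities reported by each system
erp = {"P-100": (500, datetime(2024, 5, 1, 8, 0)), "P-200": (80, datetime(2024, 5, 1, 8, 0))}
mrp = {"P-100": (460, datetime(2024, 5, 1, 14, 30)), "P-200": (80, datetime(2024, 5, 1, 9, 0))}
view, conflicts = consolidate({"ERP": erp, "MRP": mrp})
print(view["P-100"][:2], conflicts)  # (460, 'MRP') ['P-100']
```

A dashboard built on `view` shows one number per part, while `conflicts` points data owners to the records that need reconciling at the source.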

Training employees in data management best practices is equally important. Organizations should invest in programs that teach data scientists about supply chain principles and supply chain professionals about data science.

Organizations that prioritize data quality can reduce operational risks and costs by 60%. This approach turns supply chain predictive analytics from a promise into a powerful decision-making tool.

Conclusion: The Data Quality Imperative for Supply Chain Analytics Success

Our analysis shows that data quality is the foundation of effective supply chain predictive analytics. The market may grow to USD 63.77 billion by 2032, but companies are still held back by basic data quality challenges that keep AI from reaching its full potential. That 81% of AI professionals report significant data quality problems at their companies shows how deep this issue runs across industries.

Money is at stake. Poor data quality costs companies 8% to 12% of their revenue, and for service companies these issues can consume up to 60% of expenses. Even so, supply chain leaders who make data quality a priority before moving into advanced analytics can address these problems.

Good data governance offers the best protection against quality problems. Regular audits, data cleansing, and structured validation processes build a reliable foundation for analytics. Cross-functional teams strengthen that foundation by breaking down the silos that fragment vital supply chain information.

Supply chain leaders who tackle these data quality challenges before spending heavily on AI gain a real edge over competitors. The path to quality data takes time and money, but well-implemented AI solutions can cut costs by 20% and boost revenue by 10%. Supply chain professionals who want to learn more about data management can find specialized tools on eMoldino’s website.

For predictive analytics to succeed, quality data matters more than sophisticated AI algorithms. Companies that understand and act on this will turn their supply chains from reactive into predictive operations, better equipped to handle today’s complex global market.

About the Author

eMoldino


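eMoldino is dedicated to digitizing, simplifying, and transforming your manufacturing and supply chain operations. We help manufacturers worldwide drive enterprise innovation while upholding our core values of collaboration and sustainability. Contact us to learn more.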
